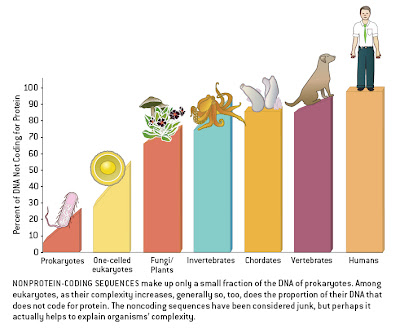

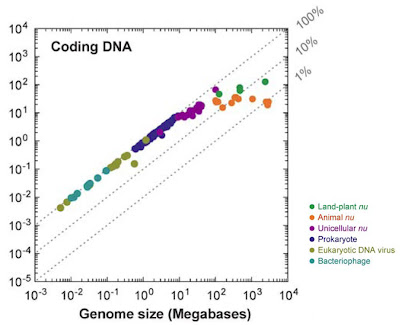

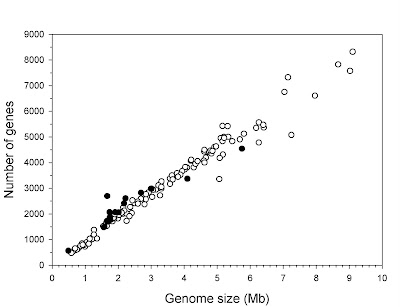

The human genome, like that of most eukaryotes, is dominated by non-coding DNA sequences. In humans, protein-coding exons constitute only about 1.5% of the total DNA sequence. The rest is made up of non-coding elements of various types, including pseudogenes (both classical and processed), introns, simple sequence repeats (microsatellites), and especially transposable elements — sequences capable of autonomous or semi-autonomous movement around, and in most cases duplication within, the genome. Endogenous retroviruses (ERVs), which are very similar to or indeed are classified as long terminal repeat (LTR) retrotransposons, represent one type of transposable element within Class I (elements that use an RNA intermediate during transposition; Class II elements transpose directly from DNA to DNA by cut-and-paste mechanisms). Roughly 8% of the human genome is represented by ERVs, which are descendants of former exogenous retroviruses that became incorporated into the germline genome.

It seems that no discussion about non-coding DNA is complete without stating that until recently it was all dismissed as useless junk. This claim is demonstrably false, but that does not render it uncommon. Some scientists did indeed characterize non-coding DNA as mostly useless, but finding references to this effect that do not also make explicit allowances for potential functions in some non-coding regions is challenging. Even authors such as Ohno and Comings, who first used the term “junk DNA”, noted that this did not imply a total lack of function. In fact, for much of the early period following the discovery of non-coding DNA, there was plentiful speculation about what this non-coding DNA must be doing — and it must be doing something, many authors argued, or else it would have been eliminated by natural selection. (Hence the fallacy involved in claiming that “Darwinism” prevented people from considering functions for non-coding regions within the genome).

Some authors rejected this automatic assumption of function, and argued instead that mechanisms of non-coding DNA accumulation — such as the accretion of pseudogenes following duplication (“junk DNA” sensu stricto) or insertions of transposable elements (“selfish DNA”) — could account for the presence of so much non-coding material without appeals to organism-level functions. However, the originators of such ideas often were careful to note that this did not preclude some portions of non-coding DNA from taking on functions, especially in gene regulation [Function, non-function, some function: a brief history of junk DNA].

There are lots of examples of particular transposable elements, which probably began as parasitic sequences, becoming co-opted into integral roles within the host genome. This process has played an important role in several major transitions during the macroevolutionary history of lineages such as our own. There is a large and growing literature on this topic, but reviewing this is beyond the scope of this post (see chapter 11 in The Evolution of the Genome for some examples). The present post will focus on only one recent case that was published this month in the Proceedings of the National Academy of Sciences of the USA by Ting Wang, David Haussler, and colleagues which focuses on the role of ERVs in the evolution of a key human gene regulatory system.

Here is the abstract from their paper (which is open access and is available here):

Species-specific endogenous retroviruses shape the transcriptional network of the human tumor suppressor protein p53

The evolutionary forces that establish and hone target gene networks of transcription factors are largely unknown. Transposition of retroelements may play a role, but its global importance, beyond a few well described examples for isolated genes, is not clear. We report that LTR class I endogenous retrovirus (ERV) retroelements impact considerably the transcriptional network of human tumor suppressor protein p53. A total of 1,509 of

319,000 human ERV LTR regions have a near-perfect p53 DNA binding site. The LTR10 and MER61 families are particularly enriched for copies with a p53 site. These ERV families are primate-specific and transposed actively near the time when the New World and Old World monkey lineages split. Other mammalian species lack these p53 response elements. Analysis of published genomewide ChIP data for p53 indicates that more than one-third of identified p53 binding sites are accounted for by ERV copies with a p53 site. ChIP and expression studies for individual genes indicate that human ERV p53 sites are likely part of the p53 transcriptional program and direct regulation of p53 target genes. These results demonstrate how retroelements can significantly shape the regulatory network of a transcription factor in a species-specific manner.

The TP53 gene is a “master control gene” — a sequence whose product (“protein 53”, or “p53“) is a transcription factor that binds to DNA and regulates the expression of other genes, including ones involved in DNA repair, cell cycle regulation, and programmed cell death (apoptosis). It is so important that it has been dubbed “the guardian of the genome”. Mutations in this gene can be highly detrimental: the “T” in TP53 stands for tumor, and mutations in this gene are often associated with cancers. This includes many smoking-related cancers.

The authors of this study report that particular ERVs contain sites to which the p53 protein binds. As a result of past retrotransposition, these ERVs tend to be distributed in various locations in the genome. This makes it possible for the p53 protein to bind not just at one site, but at sites dispersed in different regions, and therefore in proximity to a variety of other genes. It is this distributed network of binding sites that allows p53 to regulate so many other genes in its role as genome guardian. And this is only possible because an ERV with a site to which the p53 protein is capable of binding inserted into the genome of an early primate ancestor some 40 million years ago, made copies of itself throughout the genome, and then became useful as a source of binding sites. This is classic co-option (exaptation) at the genomic level, and represents the very same kind of explanation that Darwin himself offered for the evolution of complex structures at the organismal scale.

While this is a truly interesting discovery that sheds even more light on the complex history of the genome, it also highlights some important points that I have tried to make on this blog. First, this applies to only a fraction of non-coding DNA. Only about 8% of the genome is made up of ERVs, and, of these, only 1,509 of 319,000 copies (0.5%) include the relevant binding site. About 90% of the ERVs are represented only by “solo LTRs”, the long repeats at the end that remain after the rest of the element was deleted. Moreover, several ERVs have been implicated in autoimmune diseases. Thus, not only is only a small fraction likely to be involved in gene regulatory networks such as that of TP53, others are clearly maladaptive from the perspective of the host genome.

The evolution of the genome is a complex process involving multiple types of elements and interactions at several levels of organization. While very few authors ever claimed that all non-coding DNA was totally without function, it is certainly the case that non-coding sequences are worthy of the new-found attention that they have received from the genomics community. Let us hope that this will include more integration with evolutionary biology than has been evident in the past, as it clearly requires an appreciation of both complexity and history.

_________

ps: The press release from UC Santa Cruz by Karen Schmidt is quite good (notwithstanding the mandatory “it was dismissed as junk” line).